The Ins and Outs of Regression: Types, Training, and Evaluation

By Parth Maniar (@theteacoder)

Regression is a supervised learning task in machine learning: given an input, a regression model predicts a continuous (real-valued) output. In this post, we explore the ins and outs of regression, including the main types of regression algorithms, the techniques used to train these models, and the metrics used to evaluate their performance.

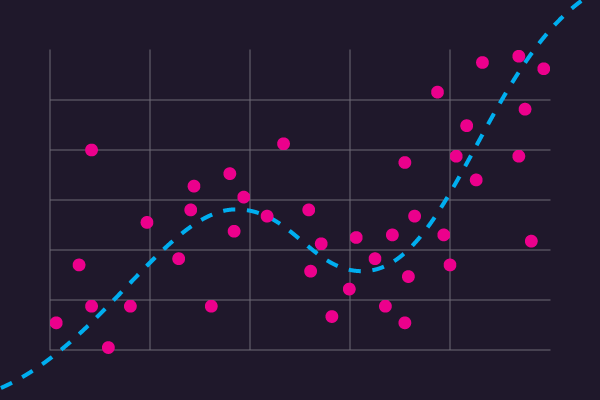

There are several types of regression algorithms, each with its own strengths and limitations. The most commonly used is linear regression, which assumes that the relationship between the input and output variables is linear: the predicted output is a weighted sum of the input variables plus a constant (the intercept). With a single input this relationship is a straight line; with several inputs it is a plane or hyperplane. Linear regression is simple, fast, and easy to interpret, and it works well on data sets with a strong linear relationship between the input and output variables.
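For a single input, the straight-line case can be fitted in closed form by ordinary least squares. The minimal sketch below assumes that approach; the function names and the small data set are hypothetical, chosen only for illustration.

```python
def fit_simple_linear_regression(xs, ys):
    """Return (slope, intercept) that minimize the sum of squared errors."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Closed-form OLS: slope = S_xy / S_xx, and the line passes through (mean_x, mean_y).
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    s_xx = sum((x - mean_x) ** 2 for x in xs)
    if s_xx == 0:
        raise ValueError("all x values are identical; the slope is undefined")
    slope = s_xy / s_xx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(slope, intercept, x):
    """Evaluate the fitted straight line at x."""
    return slope * x + intercept


# Made-up data lying roughly on y = 2x + 1.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.1, 4.9, 7.2, 8.8, 11.0]
slope, intercept = fit_simple_linear_regression(xs, ys)
print(f"slope={slope:.2f}, intercept={intercept:.2f}")  # slope=1.97, intercept=1.09
print(f"prediction at x=6: {predict(slope, intercept, 6.0):.2f}")  # 12.91
```

The fitted slope (1.97) and intercept (1.09) are close to, but not exactly, the 2 and 1 the data were loosely based on, because the points contain small deviations from the line.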

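Another widely used algorithm is logistic regression, which applies when the output variable is binary (that is, it can take only two values, such as 0 or 1). Logistic regression predicts the probability that a given input belongs to one of the two classes by passing a linear combination of the inputs through the logistic (sigmoid) function. Despite its name, it is mainly used for classification: the predicted probability is typically compared with a threshold, such as 0.5, to assign a class.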
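A minimal sketch of logistic regression follows, assuming a small made-up data set (hours studied versus pass/fail) and plain batch gradient descent on the log loss as the training method. The function names and the hyperparameters `lr` and `epochs` are hypothetical choices for illustration, not a reference implementation.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function mapping any real z into (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict_proba(weights, bias, features):
    """P(y = 1 | features) = sigmoid(w . x + b)."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(z)


def fit_logistic_regression(X, y, lr=0.1, epochs=2000):
    """Batch gradient descent on the average log loss (cross-entropy)."""
    n, n_features = len(X), len(X[0])
    weights, bias = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for features, target in zip(X, y):
            # For log loss, the gradient w.r.t. z is simply (predicted - actual).
            error = predict_proba(weights, bias, features) - target
            for j, x in enumerate(features):
                grad_w[j] += error * x
            grad_b += error
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


# Hypothetical data: hours studied -> passed (1) or failed (0).
X = [[0.5], [1.0], [1.5], [2.0], [3.0], [3.5], [4.0], [5.0]]
y = [0, 0, 0, 1, 0, 1, 1, 1]
weights, bias = fit_logistic_regression(X, y)
p = predict_proba(weights, bias, [2.5])
label = 1 if p >= 0.5 else 0  # threshold the probability to get a class
print(f"P(pass | 2.5 hours) = {p:.2f} -> predicted class {label}")
```

The model itself outputs a probability; the 0.5 threshold is a separate decision and can be moved when one type of mistake is costlier than the other.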

Non-linear regression, as the name suggests, is used when the relationship between the input and output variables is not linear, for example when the output grows exponentially with an input, or when several inputs interact with one another in a non-linear way. Non-linear models can be harder to fit and interpret than linear ones, but on data with a genuinely curved relationship they can be considerably more accurate than a straight-line fit.
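As one simple illustration, the sketch below fits the exponential model y = a·e^(bx) by taking logarithms, which turns it into a straight-line fit of ln(y) on x. This log-linearization is an assumed technique chosen for brevity: it requires every y to be positive and minimizes error on the log scale. General non-linear least squares would instead minimize squared error on y directly with an iterative optimizer. The function name and data are hypothetical.

```python
import math


def fit_exponential(xs, ys):
    """Fit y = a * exp(b * x) by least squares on ln(y) = ln(a) + b * x.

    Assumes every y is positive. Errors are minimized on the log scale,
    which is not identical to minimizing squared error on y itself.
    """
    if any(y <= 0 for y in ys):
        raise ValueError("log-linearization requires positive y values")
    log_ys = [math.log(y) for y in ys]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_ly = sum(log_ys) / n
    # Ordinary least-squares line through the transformed points (x, ln y).
    s_xy = sum((x - mean_x) * (ly - mean_ly) for x, ly in zip(xs, log_ys))
    s_xx = sum((x - mean_x) ** 2 for x in xs)
    b = s_xy / s_xx
    a = math.exp(mean_ly - b * mean_x)  # undo the log on the intercept
    return a, b


# Synthetic, noise-free data generated from y = 2 * exp(0.5 * x).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * math.exp(0.5 * x) for x in xs]
a, b = fit_exponential(xs, ys)
print(f"a={a:.3f}, b={b:.3f}")  # a=2.000, b=0.500
```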

Regardless of the type of regression algorithm, the model must be trained carefully to achieve good performance. Training means choosing the model's parameters to minimize a loss function that measures the error between the predicted and actual output values, such as the mean squared error. Common optimization techniques include gradient descent, which updates the parameters using the gradient of the loss over the whole training set, and stochastic gradient descent, which updates them using one example (or a small batch of examples) at a time. Other optimization algorithms that can be used include L-BFGS and conjugate gradient. For ordinary least-squares linear regression, the optimal parameters can also be computed directly in closed form.
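The sketch below contrasts batch gradient descent and stochastic gradient descent for a one-input linear model y_hat = w·x + b, minimizing mean squared error. The learning rates, epoch counts, and noise-free data are assumptions chosen so that both methods converge on this toy example; real data usually need feature scaling and some tuning of these values.

```python
import random


def mse_gradient(w, b, xs, ys):
    """Gradient of mean squared error for the model y_hat = w * x + b."""
    n = len(xs)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return grad_w, grad_b


def gradient_descent(xs, ys, lr=0.05, epochs=2000):
    """Batch gradient descent: each step uses the gradient over all samples."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        gw, gb = mse_gradient(w, b, xs, ys)
        w -= lr * gw
        b -= lr * gb
    return w, b


def stochastic_gradient_descent(xs, ys, lr=0.01, epochs=500, seed=0):
    """SGD: update after each individual sample, visiting samples in random order."""
    rng = random.Random(seed)  # fixed seed so runs are reproducible
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)
        for i in indices:
            gw, gb = mse_gradient(w, b, [xs[i]], [ys[i]])
            w -= lr * gw
            b -= lr * gb
    return w, b


# Noise-free data from y = 3x + 2, so both methods should recover w = 3, b = 2.
xs = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0]
ys = [3 * x + 2 for x in xs]
w_gd, b_gd = gradient_descent(xs, ys)
w_sgd, b_sgd = stochastic_gradient_descent(xs, ys)
print(f"GD:  w={w_gd:.4f}, b={b_gd:.4f}")
print(f"SGD: w={w_sgd:.4f}, b={b_sgd:.4f}")
```

Each batch step costs a full pass over the data, while SGD takes many cheap, noisier steps; on large data sets that trade-off usually favors SGD or mini-batch variants.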

Once a regression model has been trained, it should be evaluated to determine how well it makes predictions, ideally on data that were not used for training. Common evaluation metrics include mean absolute error (MAE), mean squared error (MSE), and root mean squared error (RMSE). MAE is the average size of the errors and is less sensitive to outliers; MSE squares each error, so large errors are penalized much more heavily than small ones; RMSE is the square root of MSE, which expresses the error in the same units as the target variable. The appropriate metric depends on the specific goals of the analysis and the nature of the data.
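The three metrics can be computed directly from their definitions. This minimal sketch uses hypothetical function names and made-up values; libraries such as scikit-learn provide equivalent, well-tested functions.

```python
import math


def _check_inputs(y_true, y_pred):
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and the same length")


def mean_absolute_error(y_true, y_pred):
    """Average absolute difference; every unit of error counts equally."""
    _check_inputs(y_true, y_pred)
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mean_squared_error(y_true, y_pred):
    """Average squared difference; large errors dominate the total."""
    _check_inputs(y_true, y_pred)
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def root_mean_squared_error(y_true, y_pred):
    """Square root of MSE, expressed in the same units as the target."""
    return math.sqrt(mean_squared_error(y_true, y_pred))


y_true = [3.0, 5.0, 2.0, 7.0]
y_pred = [2.5, 5.0, 4.0, 8.0]
mae = mean_absolute_error(y_true, y_pred)
mse = mean_squared_error(y_true, y_pred)
rmse = root_mean_squared_error(y_true, y_pred)
print(f"MAE={mae:.4f}, MSE={mse:.4f}, RMSE={rmse:.4f}")  # MAE=0.8750, MSE=1.3125, RMSE=1.1456
```

Here the single error of 2.0 contributes 4.0 of the 5.25 total squared error (about 76%) but only 2.0 of the 3.5 total absolute error (about 57%), which is why MSE and RMSE react more strongly to large errors than MAE does.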

In summary, regression is a powerful and widely used tool in machine learning. It predicts continuous or real-valued outputs from given inputs and can be applied to a wide range of problems. Whether you are trying to predict stock prices, house prices, or the likelihood of a person developing a certain disease, regression algorithms can provide valuable insights and useful predictions. By understanding the different types of regression, the techniques used to train these models, and the metrics used to evaluate their performance, you can apply regression effectively in your own analyses.

Regression models are used in a wide range of applications to predict continuous outcomes (or, in the case of logistic regression, probabilities). Here are a few examples:

Sales forecasting: Regression models can be used to predict future sales based on factors such as past sales data, marketing efforts, and economic indicators. For example, a company might use a linear regression model to predict future sales based on the number of marketing emails sent, the average customer spending per purchase, and the state of the economy.

Risk assessment: Regression models can be used to predict the likelihood of an event occurring, such as a customer defaulting on a loan or a patient developing a certain medical condition. For example, a bank might use a logistic regression model to predict the probability that a borrower will default on a loan based on their credit score, income, and debt-to-income ratio.

Quality control: Regression models can be used to predict the quality of a product based on factors such as raw materials and manufacturing processes. For example, a manufacturer might use a multiple linear regression model to predict the strength of a steel beam based on the chemical composition of the steel and the temperature at which it was cooled.

Environmental modeling: Regression models can be used to estimate the effect of certain factors on the environment, such as the impact of greenhouse gas emissions on global temperatures. For example, a scientist might use a linear regression model to estimate how global temperatures respond to greenhouse gas emissions and other factors. Using such a model to project temperatures a century ahead, however, means extrapolating well beyond the observed data, which should be done with caution.

Economics: Regression models are commonly used in economics to estimate the relationship between variables, such as the relationship between a country's GDP and its unemployment rate. For example, an economist might use a linear regression model to predict a country's unemployment rate based on its GDP, its rate of inflation, and other factors.

These are just a few examples of the many ways in which regression models are used.
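Several of the examples above (sales forecasting, quality control, economics) use more than one input, which calls for multiple linear regression. The minimal sketch below fits it by ordinary least squares, solving the normal equations (XᵀX)β = Xᵀy with Gaussian elimination. The function names, the three features, and the coefficients used to generate the data are all hypothetical.

```python
def solve_linear_system(A, b):
    """Gaussian elimination with partial pivoting; A is assumed non-singular."""
    n = len(A)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]  # augmented matrix [A | b]
    for col in range(n):
        # Swap in the row with the largest pivot to reduce rounding error.
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular or nearly singular")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def fit_multiple_linear_regression(X, y):
    """Ordinary least squares via the normal equations (X^T X) beta = X^T y.

    A column of ones is prepended so that beta[0] is the intercept.
    """
    rows = [[1.0] + [float(v) for v in features] for features in X]
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * t for r, t in zip(rows, y)) for i in range(k)]
    return solve_linear_system(xtx, xty)


# Hypothetical noise-free data: y = 5 + 2*x1 + 0.5*x2 - 1*x3.
true_coefficients = [5.0, 2.0, 0.5, -1.0]  # intercept, then one weight per feature
X = [[1, 20, 3], [2, 25, 1], [3, 22, 4], [4, 30, 2], [5, 28, 5], [6, 35, 3]]
y = [true_coefficients[0] + sum(w * x for w, x in zip(true_coefficients[1:], row)) for row in X]
beta = fit_multiple_linear_regression(X, y)
print([round(b, 6) for b in beta])  # close to [5.0, 2.0, 0.5, -1.0]
```

Forming XᵀX squares the condition number of the problem, so for real, possibly ill-conditioned data a library least-squares routine such as `numpy.linalg.lstsq` is the safer choice.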